Whisper-based speech recognition server

In the last blog post, Token authenticated aiohttp server, we introduced an authenticated aiohttp server. In this post, we build on the previous posts to create an authenticated Automatic Speech Recognition (ASR) server that uses Whisper, the speech recognition model recently introduced by OpenAI.

Speech recognition implementation

There are different ways to implement this, and the choice here is somewhat arbitrary: I chose Whisper because it is accurate and simple to integrate. One approach is to decode the received audio in memory and pass it to the model's transcribe function, as follows:

```python
import io
import time
import logging

import whisper
import soundfile as sf
from aiohttp import web

logger = logging.getLogger(__name__)

# load model
model = whisper.load_model("base")


async def stt(request):
    """
    Speech to text routine to transcribe incoming wave audio and deliver
    the resulting text in return.

    Args:
        request: aiohttp request whose body holds the raw audio bytes.

    Returns:
        Json response.
    """
    response = {}
    stime = time.time()
    bytes_data = await request.content.read()
    logger.debug("size of received bytes data: %d bytes", len(bytes_data))

    try:
        # decode the audio in memory (no temp file is written);
        # Whisper expects 16 kHz mono float32 samples
        audio, fs = sf.read(io.BytesIO(bytes_data), dtype="float32")
        result = model.transcribe(audio=audio)
        # result["text"] is already a single string, so no join is needed
        text = result["text"].strip()
        tproc = round(time.time() - stime, 3)
        response = {"text": text, "proc_duration": tproc, "status": "success"}

    except Exception as e:
        response = {"Error": str(e), "status": "failed"}
        logger.error("-> Error: %s", e)

    return web.json_response(response)
```
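Whisper operates on 16 kHz mono audio, while sf.read returns the samples at whatever rate and channel count the file was stored with, so it can help to inspect an upload before transcribing it. The following is a minimal sketch of such a check using only the standard library; `check_wav` and `make_silence` are hypothetical helpers, not part of the server, and the `wave` module only understands PCM WAV files.

```python
import io
import wave

WHISPER_SAMPLE_RATE = 16000  # Whisper models operate on 16 kHz audio


def check_wav(bytes_data: bytes) -> dict:
    """Inspect a PCM WAV payload and report whether it is 16 kHz mono."""
    with wave.open(io.BytesIO(bytes_data), "rb") as wav:
        channels = wav.getnchannels()
        rate = wav.getframerate()
        frames = wav.getnframes()
    return {
        "channels": channels,
        "sample_rate": rate,
        "duration_s": frames / rate,
        "ok": channels == 1 and rate == WHISPER_SAMPLE_RATE,
    }


def make_silence(rate: int, channels: int, seconds: float = 0.5) -> bytes:
    """Build an in-memory 16-bit PCM WAV of silence, for demonstration only."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit PCM
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * channels * int(rate * seconds))
    return buffer.getvalue()


good = check_wav(make_silence(16000, 1))
bad = check_wav(make_silence(44100, 2))
print(good["ok"], bad["ok"])  # True False
```

In the handler, a payload that fails this check could be rejected with a "failed" response, or downmixed and resampled before it is passed to the model.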

The config

We will use the same config specified in Token authenticated aiohttp server/config. Note the allowed_tokens section, which you can replace with your own server's allowed tokens.
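For orientation, here is a sketch of the config shape that the server code reads. Only the key names (server, http, allowed_tokens, request_max_size) come from the code in this post; the values, the use of a list for the tokens, and the `validate_conf` helper are assumptions for illustration, so refer to the previous post for the actual file.

```python
import json

# Hypothetical example values; only the key names come from the server code.
example_conf = {
    "server": {
        "http": {
            "allowed_tokens": ["token1"],
            "request_max_size": 10 * 1024 * 1024,  # assumed 10 MiB upload cap
        }
    }
}


def validate_conf(conf: dict) -> dict:
    """Return the http section after checking the two keys the server reads."""
    http = conf["server"]["http"]
    if not http["allowed_tokens"]:
        raise ValueError("allowed_tokens must list at least one token")
    if int(http["request_max_size"]) <= 0:
        raise ValueError("request_max_size must be a positive byte count")
    return http


# Round-trip through JSON, as the server does when it reads config.json.
http_conf = validate_conf(json.loads(json.dumps(example_conf)))
print(http_conf["allowed_tokens"])
```

The request_max_size value is passed to aiohttp as client_max_size, which is measured in bytes, so it needs to be large enough for the audio files you plan to upload.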

Code

Put together, the previous steps look as follows in Python:

```python
import io
import json
import time
import logging
from typing import Callable, Tuple

import whisper
import soundfile as sf
from aiohttp import web

# init logging
logger = logging.getLogger(__name__)
logging.basicConfig(format="[%(asctime)s.%(msecs)03d] p%(process)s {%(pathname)s: %(funcName)s: %(lineno)d}: %(levelname)s: %(message)s", datefmt="%Y-%m-%d %p %I:%M:%S")
logger.setLevel(logging.DEBUG)

# load model
model = whisper.load_model("base")

# parse conf
with open("config.json", "rb") as config_file:
    conf = json.loads(config_file.read())


async def ping(request):
    logger.debug("-> Received PING")
    response = web.json_response({"text": "pong", "status": "success"})
    return response


async def stt(request):
    """
    Speech to text routine to transcribe incoming wave audio and deliver
    the resulting text in return.

    Args:
        request: aiohttp request whose body holds the raw audio bytes.

    Returns:
        Json response.
    """
    response = {}
    stime = time.time()
    bytes_data = await request.content.read()
    logger.debug("size of received bytes data: %d bytes", len(bytes_data))

    try:
        # decode the audio in memory (no temp file is written);
        # Whisper expects 16 kHz mono float32 samples
        audio, fs = sf.read(io.BytesIO(bytes_data), dtype="float32")
        result = model.transcribe(audio=audio)
        # result["text"] is already a single string, so no join is needed
        text = result["text"].strip()
        tproc = round(time.time() - stime, 3)
        response = {"text": text, "proc_duration": tproc, "status": "success"}

    except Exception as e:
        response = {"Error": str(e), "status": "failed"}
        logger.error("-> Error: %s", e)

    return web.json_response(response)


def token_auth_middleware(user_loader: Callable,
                          request_property: str = 'user',
                          auth_scheme: str = 'Token',
                          exclude_routes: Tuple = tuple(),
                          exclude_methods: Tuple = tuple()) -> Callable:
    """
    Checks an auth token and adds a user from user_loader in request.

    Note: exclude_routes and exclude_methods are accepted but not used here.
    """
    @web.middleware
    async def middleware(request, handler):
        try:
            scheme, token = request.headers['Authorization'].strip().split(' ')
        except KeyError:
            raise web.HTTPUnauthorized(reason='Missing authorization header')
        except ValueError:
            raise web.HTTPForbidden(reason='Invalid authorization header')

        if auth_scheme.lower() != scheme.lower():
            raise web.HTTPForbidden(reason='Invalid token scheme')

        user = await user_loader(token)
        if user:
            request[request_property] = user
        else:
            raise web.HTTPForbidden(reason='Token doesn\'t exist')
        return await handler(request)
    return middleware


async def init():
    """
    Init web application.
    """
    async def user_loader(token: str):
        user = {'uuid': 'fake-uuid'} if token in conf["server"]["http"]["allowed_tokens"] else None
        return user

    app = web.Application(client_max_size=conf["server"]["http"]["request_max_size"],
                          middlewares=[token_auth_middleware(user_loader)])

    app.router.add_route('GET', '/ping', ping)
    # the audio is sent in the body of a GET request
    app.router.add_route('GET', '/stt', stt)
    return app


if __name__ == '__main__':
    web.run_app(init())
```
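One thing to keep in mind with this design is that model.transcribe is a blocking call: while it runs inside the async handler, the event loop cannot serve other requests, including /ping. A common way around this is to hand the blocking work to a thread with run_in_executor. The sketch below illustrates the idea with the standard library only; `blocking_transcribe` is a hypothetical stand-in for the model call, and `heartbeat` exists only to show that the loop keeps running in the meantime.

```python
import asyncio
import time


def blocking_transcribe(audio: bytes) -> dict:
    """Hypothetical stand-in for model.transcribe: blocks the calling thread."""
    time.sleep(0.2)
    return {"text": f"transcribed {len(audio)} bytes"}


async def heartbeat(ticks: list) -> None:
    """Record timestamps while the transcription runs to show the loop is free."""
    for _ in range(3):
        ticks.append(time.monotonic())
        await asyncio.sleep(0.05)


async def main() -> tuple:
    loop = asyncio.get_running_loop()
    ticks: list = []
    # None selects the loop's default ThreadPoolExecutor.
    job = loop.run_in_executor(None, blocking_transcribe, b"\x00" * 32)
    result, _ = await asyncio.gather(job, heartbeat(ticks))
    return result, ticks


result, ticks = asyncio.run(main())
print(result["text"], len(ticks))  # transcribed 32 bytes 3
```

In the stt handler, the same pattern would wrap the model call, for example with functools.partial(model.transcribe, audio=audio) as the function passed to run_in_executor. Whether this helps throughput depends on the hardware, since a single model instance still processes one request at a time.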

Testing

When executed, the previous code starts a server running on http://localhost:8080/.

To test your server, you can use curl: `curl -H 'Authorization: Token token1' http://localhost:8080/ping` (note that you need to pass a token that is listed in the config).

The transcription endpoint can be tested by sending the audio file to transcribe: `curl -X GET -H 'Authorization: Token token1' --data-binary @WAVEFILENAME.wav http://127.0.0.1:8080/stt`. The request uses GET because the /stt route is registered for GET in the code, and it needs the same Authorization header as the ping request because the middleware guards every route.

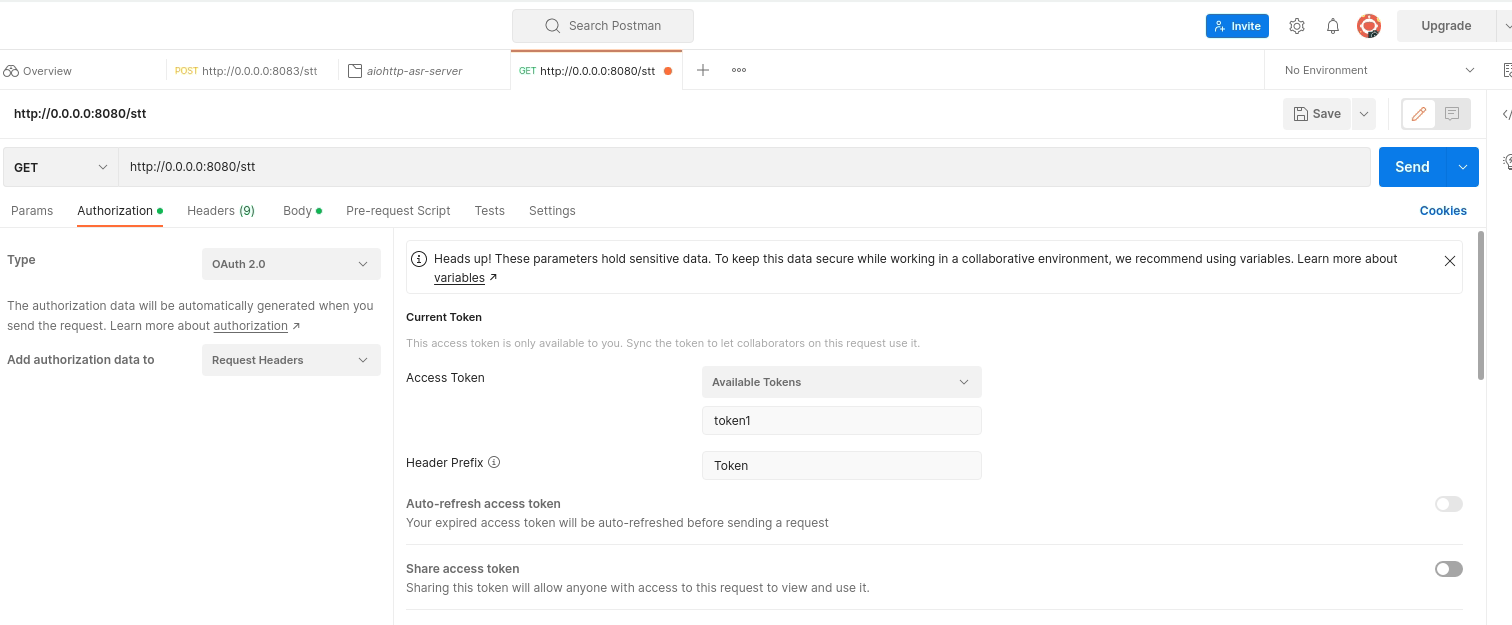
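The same request can also be built from Python. The sketch below uses only the standard library and assumes the URL and token from the curl examples above; `build_stt_request` is a hypothetical helper, and the placeholder bytes stand in for the contents of a real wave file.

```python
import urllib.request

# Assumed values: adjust the URL, token, and payload to match your setup.
SERVER_URL = "http://127.0.0.1:8080/stt"
TOKEN = "token1"


def build_stt_request(wav_bytes: bytes, url: str = SERVER_URL,
                      token: str = TOKEN) -> urllib.request.Request:
    """Build a GET request carrying the audio as its raw body."""
    return urllib.request.Request(
        url,
        data=wav_bytes,
        method="GET",  # matches the route registered with add_route('GET', ...)
        headers={
            "Authorization": f"Token {token}",
            "Content-Type": "application/octet-stream",
        },
    )


request = build_stt_request(b"RIFF....WAVE")  # placeholder bytes, not real audio
print(request.get_method(), request.get_header("Authorization"))

# To actually send it against a running server:
# import json
# with urllib.request.urlopen(request) as reply:
#     print(json.loads(reply.read()))
```

Building the request separately from sending it makes it easy to check the method and headers before pointing the client at a live server.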

Alternatively, you can use Postman, which is a tool for developers to design, build and test their APIs.

For this, create a GET request, specify the API/server link, add the wave file to transcribe to the body (as binary), and specify your token in the headers.

This can be done under the authorization tab: choose OAuth 2.0 as the type, then set "Access Token" to an allowed token (as specified in the config) and "Header Prefix" to Token (this is the same as defined in def token_auth_middleware(.. auth_scheme: str = 'Token'..)).

Figure 20: Postman authorization config
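With these settings, the header that reaches the server has the form Authorization: Token followed by the token, which is what the middleware splits into a scheme and a token. The sketch below mirrors that parsing logic as a plain function so the accepted and rejected cases are easy to see; `AuthError` and `parse_authorization` are hypothetical names, and a plain dict is used in place of aiohttp's case-insensitive headers.

```python
class AuthError(Exception):
    """Hypothetical error type standing in for aiohttp's HTTP exceptions."""

    def __init__(self, status: int, reason: str) -> None:
        super().__init__(reason)
        self.status = status
        self.reason = reason


def parse_authorization(headers: dict, auth_scheme: str = "Token") -> str:
    """Return the token from an 'Authorization: <scheme> <token>' header."""
    try:
        scheme, token = headers["Authorization"].strip().split(" ")
    except KeyError:
        raise AuthError(401, "Missing authorization header")
    except ValueError:
        # raised when the value does not split into exactly two parts
        raise AuthError(403, "Invalid authorization header")
    if scheme.lower() != auth_scheme.lower():
        raise AuthError(403, "Invalid token scheme")
    return token


print(parse_authorization({"Authorization": "Token token1"}))
for bad in ({}, {"Authorization": "Token"}, {"Authorization": "Bearer token1"}):
    try:
        parse_authorization(bad)
    except AuthError as err:
        print(err.status, err.reason)
```

This is why the "Header Prefix" matters: with a prefix such as Bearer, the scheme comparison fails and the server answers with a 403 before the token is even looked up.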

The server responses should look as follows:

Ping response: {"text": "pong", "status": "success"}

Stt response: {"text": "transcription", "proc_duration": 0.xxx , "status": "success"}
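On the client side, the status field tells the two response shapes apart: a successful reply carries text and proc_duration, while a failed one carries an Error message. Here is a minimal sketch of handling both; `read_stt_response` is a hypothetical helper and the example bodies use made-up values.

```python
import json


def read_stt_response(body: str) -> str:
    """Return the transcription, or raise if the server reported a failure."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise RuntimeError(payload.get("Error", "unknown server error"))
    return payload["text"]


# Example bodies shaped like the handler's two return values (values made up).
ok_body = '{"text": "hello world", "proc_duration": 0.42, "status": "success"}'
failed_body = '{"Error": "could not decode audio", "status": "failed"}'

print(read_stt_response(ok_body))
try:
    read_stt_response(failed_body)
except RuntimeError as err:
    print("failed:", err)
```

Note that the handler returns HTTP 200 in both cases, so checking the status field in the JSON body is the only way to detect a failed transcription.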

Conclusion

This post built on the previous posts (Basic aiohttp server and Token authenticated aiohttp server) to deliver an authenticated ASR server based on Whisper.